The Google Way of Science - Kevin Kelly @ The Technium

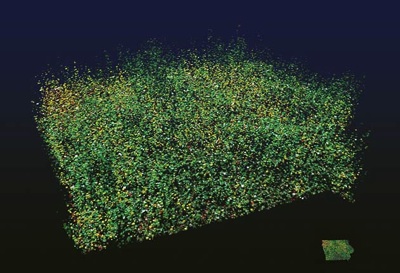

This is a wildly interesting article on how the rise of petabyte levels of data can lead to a way of making predictable observations without an overarching theory or model. Kelly argues that such methods already exist and are employed by search engines like Google and applied to the mass of data coming from radio telescope observations. His claim is that with a large enough data set one can develop a method of determining the probable outcome of an event from consistencies within the mass of data. Pivoting off another article by Chris Anderson at Wired, Kelly points to the use of correlative data as the means by which Google is able to create highly competent translations of works written in different languages without the need for human translators or a model of translation. Simply gather enough data points and you can write an algorithm that produces roughly accurate translations.

What both Kelly and Anderson argue is that, with enough data, neither an a priori hypothesis nor an a posteriori theory is necessary to make accurate predictions. And as both point out, such an argument radically changes the way we can learn in nearly any field. Where Kelly and Anderson diverge is over whether this argument means the end of theory or simply a change in how theories and models are created.

One point against this argument is W.V.O. Quine's indeterminacy of translation argument. In essence, Quine argues that the range of meaning in an unknown language is large enough that errors in translation are a given. By the same token, within a set of data points there might exist certain points that, when added to the set, break the existing model of explanation. We've already seen such a break occur between Newtonian physics and Einsteinian physics. Newton's theory of motion, while still useful in the everyday sense, cannot explain the new data points added since the development of his theory. Einstein was able to offer a new model that explained both the old data set and the new data points.

Quine's indeterminacy theory, much like Heidegger's argument against the 'clearing of all clearings', means that no matter how good a model we construct, new data points that the model cannot explain are always possible.

I have to say I have mixed feelings on the idea of predictable observations without an explanatory model. Certainly no model is perfect and one should always place primacy on evidence over theory. Yet I agree with Kelly that Anderson goes too far with his thesis that cloud data is enough to end the need for theory. Kelly believes that we are simply reaching the limits of human logic and conception. I believe that we are finally reaching the end of our mental childhood. It's only been a little over eighty years since Heisenberg introduced the uncertainty principle into quantum mechanics. Add to this the wave/particle duality of light, quantum superpositions, and the fleeting existence of virtual particles and you have a collection of ideas, thought experiments and theories that are highly difficult to understand but are understandable. So Kelly's assertion that we've arrived at the end of human conception means, I believe, that we simply have to stretch human conception further.

Cloud Theory

Tuesday, July 01, 2008 at 11:15 PM
